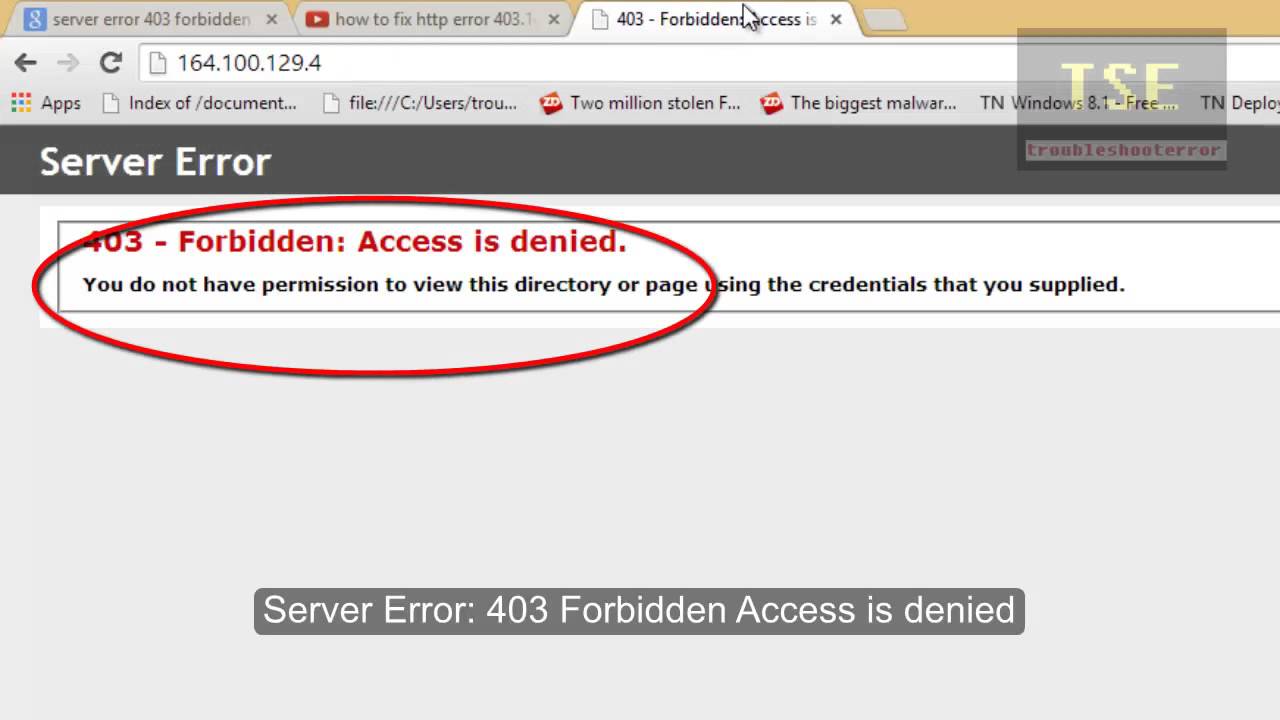

I try to download a file with wget and curl and it is rejected with a 403 error (forbidden).

I can view the file using the web browser on the same machine.

I try again with my browser's user agent, obtained from http://www.whatsmyuseragent.com, passing it to both wget and curl.


Both attempts are still forbidden. What other reasons might there be for the 403, and in what ways can I alter the wget and curl commands to overcome them?

(this is not about being able to get the file - I know I can just save it from my browser; it's about understanding why the command-line tools work differently)

update

Thanks for all the excellent answers given to this question. The specific problem I had encountered was that the server was checking the referrer. By adding the referrer to the command line I could get the file using curl and wget.

The server that checked the referrer bounced through a 302 to another location that performed no checks at all, so a curl or wget of that site worked cleanly.
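A minimal sketch of the referrer fix described above. The URLs in the example calls are placeholders, not the ones from the question, and the function names are hypothetical. `--referer` is the option name in both tools; `--location` makes curl follow the 302, while wget follows redirects by default.

```shell
# Hypothetical helpers: $1 is the page that links to the file, $2 is the file.
fetch_with_curl() {
    # --referer sets the Referer header; --location follows redirects (e.g. a 302).
    curl --fail --location --referer "$1" --remote-name "$2"
}

fetch_with_wget() {
    # wget follows redirects by default, so only the Referer header is added.
    wget --referer="$1" "$2"
}

# Example calls (placeholder URLs):
# fetch_with_curl 'http://example.com/page.html' 'http://example.com/style.css'
# fetch_with_wget 'http://example.com/page.html' 'http://example.com/style.css'
```

Passing the linking page as the referrer mimics what a browser sends when a stylesheet is loaded from that page.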

If anyone is interested, this came about because I was reading this page to learn about embedded CSS and was trying to look at the site's CSS for an example. The actual URL I was getting trouble with was this; the curl and wget commands I ended up with both passed the referrer.

Very interesting.

5 Answers

An HTTP request may contain more headers that are not set by curl or wget. For example:

- Cookie: this is the most likely reason why a request would be rejected; I have seen this happen on download sites. Given a cookie `key=val`, you can set it with the `-b key=val` (or `--cookie key=val`) option for `curl`.
- Referer (sic): when clicking a link on a web page, most browsers tend to send the current page as referrer. It should not be relied on, but even eBay failed to reset a password when this header was absent. So yes, it may happen. The `curl` option for this is `-e URL` or `--referer URL`.
- Authorization: this is becoming less popular now due to the uncontrollable UI of the username/password dialog, but it is still possible. It can be set in `curl` with the `-u user:password` (or `--user user:password`) option.
- User-Agent: some requests will yield different responses depending on the User Agent. This can be used in a good way (providing the real download rather than a list of mirrors) or in a bad way (reject user agents which do not start with `Mozilla`, or contain `Wget` or `curl`).
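The headers listed above can be combined in one request. The sketch below is illustrative only: the user-agent string, referrer, cookie value, and function names are placeholders to be replaced with what your own browser sends. wget normally reads cookies from a file (`--load-cookies`), so a single pair is sent here as a raw header instead.

```shell
# Placeholder values; copy the real ones from your browser's developer tools.
ua='Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0'
referer='http://example.com/downloads.html'
cookie='key=val'

# $1 = URL of the file to download.
fetch_like_browser_curl() {
    curl --fail --location \
         --user-agent "$ua" \
         --referer "$referer" \
         --cookie "$cookie" \
         --remote-name "$1"
}

fetch_like_browser_wget() {
    # A single cookie pair is passed as a raw Cookie header.
    wget --user-agent="$ua" \
         --referer="$referer" \
         --header="Cookie: $cookie" \
         "$1"
}

# For HTTP authentication, add:  curl --user user:password ...
#                           or:  wget --user=user --password=password ...
```

If the request still fails, removing one header at a time shows which one the server actually checks.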

You can normally use the Developer tools of your browser (Firefox and Chrome support this) to read the headers sent by your browser. If the connection is not encrypted (that is, not using HTTPS), then you can also use a packet sniffer such as Wireshark for this purpose.
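To compare against what the browser sends, it can help to see what curl itself sends. A small sketch, assuming a hypothetical helper name: in verbose mode curl prints the request headers to stderr, each prefixed with `>`, so they can be filtered out with grep.

```shell
# Hypothetical helper: print only the request lines curl sends for URL $1.
show_request_headers() {
    # --verbose writes the exchange to stderr; lines starting with '>' are the
    # request. The response body is discarded.
    curl --silent --verbose --output /dev/null "$1" 2>&1 | grep '^>'
}

# Example call (placeholder URL):
# show_request_headers 'http://example.com/style.css'
```

Any header visible in the browser's developer tools but missing from this output is a candidate for the 403.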

Besides these headers, websites may also trigger some actions behind the scenes that change state. For example, when opening a page, it is possible that a request is performed in the background to prepare the download link. Or a redirect happens on the page. These actions typically make use of JavaScript, but there may also be a hidden frame to facilitate these actions.

If you are looking for a method to easily fetch files from a download site, have a look at plowdown, included with plowshare.

Just want to add to the above answers that you could use the 'Copy as cURL' feature present in Chrome developer tools (since v26.0) and Firebug (since v1.12). You can access this feature by right-clicking the request row in the Network tab.

I tried all of the above with no luck. I then used the browser's developer tools to get the user-agent string; once I added it to the command, success.

Depending on what you're asking for, it could be a cookie. With Firefox, you can right-click when you're on the page in question and select 'View Page Info'. Choose the 'Security' icon, and then click the 'View Cookies' button.

For puzzling out cookies, the Firefox 'Live HTTP Headers' plug-in is essential. You can see what cookies get set, and what cookies get sent back to the web server.

wget can work with cookies, but it's totally infuriating, as it doesn't give a hint that it didn't send cookies. Your best bet is to remove all related cookies from your browser, and go through whatever initial login or page viewing sequence it takes. Look at 'Live HTTP Headers' for cookies, and for any POST or GET parameters. Do the first login step with wget using '--keep-session-cookies' and '--save-cookies' options. That will give you a cookie file you can look at with a text editor. Use wget --load-cookies with the cookie file for the next steps.
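The two-step cookie sequence described above might look like the following sketch. The cookie file name, the function names, and the idea that the first step is a POST login are assumptions; the real URL and form fields depend entirely on the site.

```shell
jar='cookies.txt'   # hypothetical cookie file name

# Step 1: perform the login or first page view and keep every cookie,
# including session cookies that wget would otherwise not save.
# $1 = login URL, $2 = POST body such as 'user=me&pass=secret' (site-specific).
wget_login() {
    wget --save-cookies "$jar" --keep-session-cookies \
         --post-data "$2" --output-document=/dev/null "$1"
}

# Step 2: send the saved cookies back when requesting the real file.
wget_download() {
    wget --load-cookies "$jar" "$1"
}

# Example calls (placeholder URLs and form data):
# wget_login 'http://example.com/login' 'user=me&pass=secret'
# wget_download 'http://example.com/files/archive.zip'
```

After step 1, `cookies.txt` is a plain text file, so it is easy to check whether the expected cookies were actually stored.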

Another reason this can happen is if the site requires SSL. Your browser will automatically follow a redirect from HTTP to HTTPS, but curl will not unless you pass -L (wget follows redirects by default). So try the request with HTTPS instead of HTTP.
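A small sketch of the HTTPS suggestion, with hypothetical function names: the first helper rewrites a leading `http://` to `https://`, and the second requests the rewritten URL with curl, adding `--location` so that any remaining redirect is followed as well.

```shell
# Rewrite a leading http:// to https://; any other URL is printed unchanged.
to_https() {
    printf '%s\n' "$1" | sed 's|^http://|https://|'
}

# Request the https:// form of URL $1 directly instead of relying on a redirect.
fetch_https() {
    curl --fail --location --remote-name "$(to_https "$1")"
}

# Example call (placeholder URL):
# fetch_https 'http://example.com/style.css'
```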


I tried to download a gigabyte-sized file and got 200~300 kb/s in my browser, but the same link ran at over 6 MB/s in wget. Is there some magic behind the command?

3 Answers

wget normally works a little bit faster than Chrome because it's a terminal program so there is less resource usage overhead, but that would not explain such a large difference in download speeds between wget and Chrome. You probably have an issue with downloading that large file in Chrome. wget is a better tool than Chrome for downloading large files, if you don't have to solve a captcha in order to download the file.

The discrepancies in speed that you are seeing can easily be explained by variable server load. Although I have seen high rates of throughput with wget, I've also seen relatively low rates. However, I've never seen a discrepancy in speed of the magnitude you mention, so I'm certain there is another variable that you are not considering. My experience tells me that the load on the server or network segment(s) you were using was higher when you got the lower rates and lower when you got the higher ones.

In my case I was attempting to download Android Studio for Windows (1.1 GB). After about 1 minute of downloading, Chrome was reporting 18 minutes to go.

I cancelled that download, logged onto my Debian box and used wget, which pulled it in 1m55s.


My laptop is on Wi-Fi, however, while the Debian box is hard-wired to the router.